Installation Guides¶

This document describes how to install the RIC components deployed by scripts and Helm charts under the it/dep repository, including the dependencies and required system resources.

Version history¶

| Date | Ver. | Author | Comment |
|------|------|--------|---------|
| 2019-11-25 | 0.1.0 | Lusheng Ji | Amber |
| 2020-01-23 | 0.2.0 | Zhe Huang | Bronze RC |

Overview¶

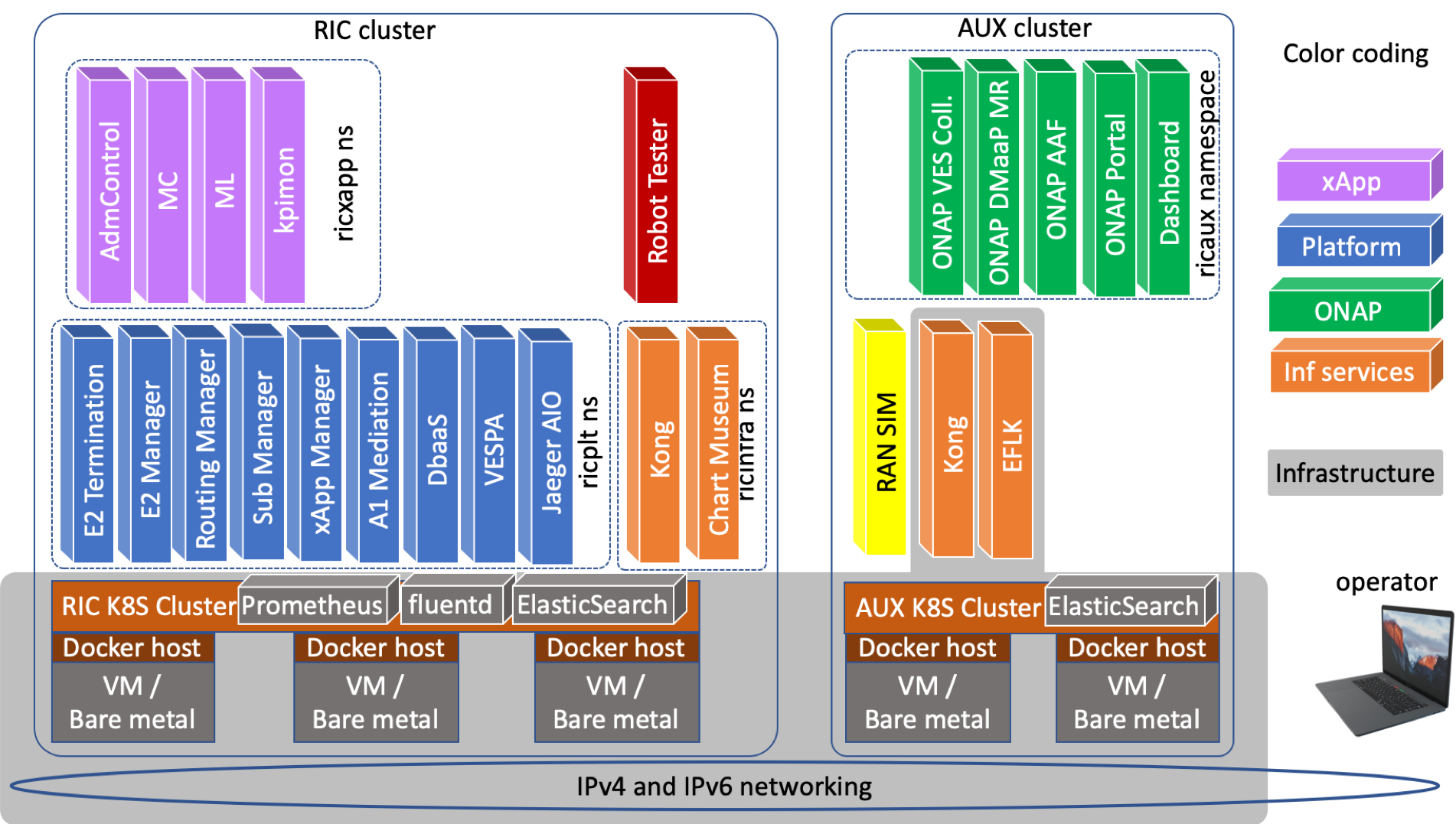

The installation of Near Realtime RAN Intelligent Controller is spread onto two separate Kubernetes clusters. The first cluster is used for deploying the Near Realtime RIC (platform and applications), and the other is for deploying other auxiliary functions. They are referred to as RIC cluster and AUX cluster respectively.

The following diagram depicts the installation architecture.

Within the RIC cluster, Kubernetes resources are deployed using three name spaces: ricinfra, ricplt, and ricxapp by default. Similarly, within the AUX cluster, Kubernetes resources are deployed using two name spaces: ricinfra, and ricaux.

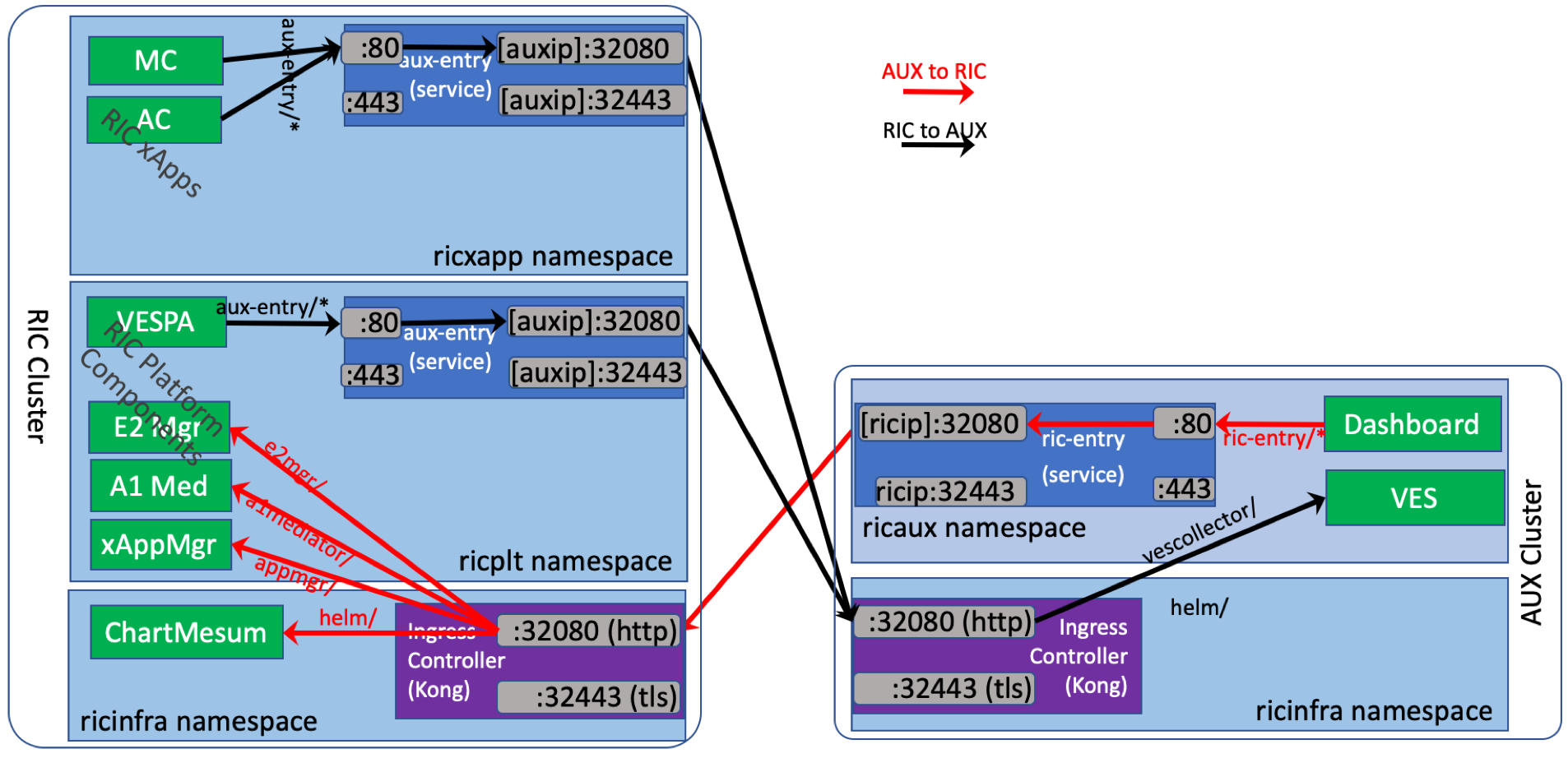

For each cluster, there is a Kong ingress controller that proxies incoming API calls into the cluster. With Kong, service APIs provided by Kubernetes resources can be accessed at the cluster node IP and port via a URL path. For cross-cluster communication, in addition to Kong, each Kubernetes namespace has a special Kubernetes service defined with an endpoint pointing to the other cluster’s Kong. This way any pod can access services exposed at the other cluster via the internal service hostname and port of this special service. The figure below illustrates the details of how Kong and external services work together to realize cross-cluster communication.

Prerequisites¶

Both RIC and AUX clusters need to fulfill the following prerequisites.

- Kubernetes v1.16.0 or above

- Helm v2.12.3 or above

- Read-write access to directory /mnt
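The prerequisites above can be checked with a small shell helper. The following is a minimal sketch and not part of the it/dep scripts: the function names `version_ge` and `dir_is_rw` are hypothetical, and the comparison assumes a `sort` that supports `-V` (as in GNU coreutils). Pass in the version numbers reported by `kubectl version` and `helm version` on your host, without the leading "v".

```shell
#!/bin/sh
# version_ge A B: succeeds when dotted version A is >= version B.
# Assumes `sort -V` (version sort) is available, as in GNU coreutils.
version_ge() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# dir_is_rw DIR: succeeds when DIR exists and the current user can
# both read and write it.
dir_is_rw() {
    [ -d "$1" ] && [ -r "$1" ] && [ -w "$1" ]
}

# Example: the first argument of each version check is a placeholder;
# replace it with the version actually installed on your host.
if version_ge "1.16.0" "1.16.0"; then echo "Kubernetes version OK"; fi
if version_ge "2.12.3" "2.12.3"; then echo "Helm version OK"; fi
if dir_is_rw /mnt; then
    echo "/mnt is read-write"
else
    echo "/mnt is not read-write for this user"
fi
```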

The following two sections show two example methods to create an environment for installing RIC.

VirtualBox VMs as Installation Hosts¶

The deployment of Near Realtime RIC can be done on a wide range of hosts, including bare metal servers, OpenStack VMs, and VirtualBox VMs. This section provides detailed instructions for setting up Oracle VirtualBox VMs to be used as installation hosts.

Networking¶

The setup requires two VMs connected by a private network. With VirtualBox, this can be done by setting up a private NAT network under its “Preferences” menu.

- Pick “Preferences”, then select the “Network” tab;

- Click on the “+” icon to create a new NAT network. A new entry will appear in the NAT networks list;

- Double click on the new network to edit its details; give it a name such as “RICNetwork”;

- In the dialog, make sure to check the “Enable Network” box, uncheck the “Supports DHCP” box, and make a note of the “Network CIDR” (for this example, it is 10.0.2.0/24);

- Click on the “Port Forwarding” button, then create the following rules in the table (name, protocol, host IP, host port, guest IP, guest port):

  - “ssh to ric”, TCP, 127.0.0.1, 22222, 10.0.2.100, 22;

  - “ssh to aux”, TCP, 127.0.0.1, 22223, 10.0.2.101, 22;

  - “entry to ric”, TCP, 127.0.0.1, 22224, 10.0.2.100, 32080;

  - “entry to aux”, TCP, 127.0.0.1, 22225, 10.0.2.101, 32080.

- Click “Ok” all the way back to create the network.
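The same NAT network can also be created from the command line with VirtualBox's `VBoxManage natnetwork` subcommand instead of the GUI. The sketch below is an alternative under stated assumptions, not a step from the procedure above: the helpers `pf_rule` and `run` and the `RUN` variable are hypothetical, the script only prints the commands unless `RUN=1` is set, and the option spelling should be checked against the `VBoxManage` manual for your VirtualBox version.

```shell
#!/bin/sh
NET_NAME="RICNetwork"
NET_CIDR="10.0.2.0/24"

# pf_rule NAME HOST_PORT GUEST_IP GUEST_PORT: print one IPv4 TCP
# port-forwarding rule bound to 127.0.0.1 on the host, in the form
# name:proto:[host ip]:host port:[guest ip]:guest port.
pf_rule() {
    printf '%s:tcp:[127.0.0.1]:%s:[%s]:%s\n' "$1" "$2" "$3" "$4"
}

# run CMD...: print the command; execute it only when RUN=1.
run() {
    echo "+ $*"
    if [ "${RUN:-0}" = "1" ]; then "$@"; fi
}

# Create the NAT network with DHCP disabled.
run VBoxManage natnetwork add --netname "$NET_NAME" \
    --network "$NET_CIDR" --enable --dhcp off

# Add the four forwarding rules listed above.
run VBoxManage natnetwork modify --netname "$NET_NAME" \
    --port-forward-4 "$(pf_rule 'ssh to ric' 22222 10.0.2.100 22)"
run VBoxManage natnetwork modify --netname "$NET_NAME" \
    --port-forward-4 "$(pf_rule 'ssh to aux' 22223 10.0.2.101 22)"
run VBoxManage natnetwork modify --netname "$NET_NAME" \
    --port-forward-4 "$(pf_rule 'entry to ric' 22224 10.0.2.100 32080)"
run VBoxManage natnetwork modify --netname "$NET_NAME" \
    --port-forward-4 "$(pf_rule 'entry to aux' 22225 10.0.2.101 32080)"
```

Printing the commands first makes it possible to review them before anything is changed on the VirtualBox host.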

Creating VMs¶

Create a VirtualBox VM:

- Click “New”, then enter the following in the pop-up: a name (for example, myric), the “Linux” type, at least 6 GB of RAM, and a 20 GB disk;

- Click “Create” to create the VM. It will appear in the list of VMs.

- Highlight the new VM entry, right click on it, and select “Settings”.

- Under the “System” tab, then the “Processor” tab, make sure to give the VM at least 2 vCPUs.

- Under the “Storage” tab, point the CD to an Ubuntu 18.04 server ISO file;

- Under the “Network” tab, then the “Adapter 1” tab, make sure to:

  - Check “Enable Network Adapter”;

  - Set “Attached to” to “NAT Network”;

  - Select the network that was created in the previous section: “RICNetwork”.

Repeat the process and create the second VM named myaux.

Booting VM and OS Installation¶

Follow the OS installation steps to install the OS onto the VM's virtual disk. During the setup, you must configure a static IP address as described in the next section. Also make sure to install the OpenSSH server.

VM Network Configuration¶

Depending on the version of the OS, the networking may be configured during the OS installation or after. The network interface is configured with a static IP address:

- IP Address: 10.0.2.100 for myric or 10.0.2.101 for myaux;

- Subnet 10.0.2.0/24, or network mask 255.255.255.0

- Default gateway: 10.0.2.1

- Name server: 8.8.8.8; if access to that server is blocked, configure a local DNS server
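On Ubuntu 18.04, which uses netplan, the static settings above could be expressed as shown below. This is a sketch only: the interface name `enp0s3` is an assumption that varies by system (check with `ip link`), and the helper `render_netplan` is hypothetical. The script prints the configuration to standard output so it can be reviewed before being installed.

```shell
#!/bin/sh
# render_netplan IFACE ADDRESS: print a netplan (version 2) config
# that gives IFACE the static ADDRESS in 10.0.2.0/24, using the
# default gateway and name server listed above.
render_netplan() {
    cat <<EOF
network:
  version: 2
  ethernets:
    $1:
      dhcp4: false
      addresses: [$2/24]
      gateway4: 10.0.2.1
      nameservers:
        addresses: [8.8.8.8]
EOF
}

# For myric; use 10.0.2.101 for myaux. enp0s3 is an assumed
# interface name.
render_netplan enp0s3 10.0.2.100
```

The output would typically be saved as a YAML file under /etc/netplan/ and activated with `sudo netplan apply`.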

Accessing the VMs¶

Because of the port forwarding configurations, the VMs are accessible from the VirtualBox host via ssh.

- To access myric: ssh {{USERNAME}}@127.0.0.1 -p 22222

- To access myaux: ssh {{USERNAME}}@127.0.0.1 -p 22223

One-Node Kubernetes Cluster¶

This section describes how to set up a one-node Kubernetes cluster on a VM installation host.

Script for Setting Up 1-node Kubernetes Cluster¶

The it/dep repo can be used to generate a simple script that helps set up a one-node Kubernetes cluster for development and testing purposes. The related files are under the tools/k8s/bin directory. Clone the repository on the target VM:

% git clone https://gerrit.o-ran-sc.org/r/it/dep

Configurations¶

The script generator reads its parameters from the following files:

- etc/env.rc: Normally, no change is needed in this file. If the environment where the Kubernetes cluster runs has special requirements, such as a private Docker registry with self-signed certificates or hostnames that can only be resolved via private /etc/hosts entries, enter those parameters in this file.

- etc/infra.rc: This file specifies the Docker host, Kubernetes, and Kubernetes CNI versions. If a version is left empty, the installation uses the default version that the OS package manager would install.

- etc/openstack.rc: If the Kubernetes cluster is deployed on OpenStack VMs, this file specifies the parameters for accessing the APIs of the OpenStack installation. This is not yet supported in the Amber release.

Generating Set-up Script¶

After the configurations are updated, the following steps will create a script file that can be used for setting up a one-node Kubernetes cluster. You must run this command on a Linux machine with the ‘envsubst’ command installed.

% cd tools/k8s/bin

% ./gen-cloud-init.sh

A file named k8s-1node-cloud-init.sh should now appear under the bin directory.
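Because gen-cloud-init.sh depends on ‘envsubst’, it can help to confirm that the command is available before generating the script. The following is a minimal sketch; the helper name `have_cmd` is hypothetical, and the package hint applies to Debian and Ubuntu systems.

```shell
#!/bin/sh
# have_cmd NAME: succeeds when NAME is found on the PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd envsubst; then
    echo "envsubst found"
else
    # On Debian/Ubuntu, envsubst ships in the gettext-base package.
    echo "envsubst missing; try: sudo apt-get install gettext-base" >&2
fi
```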

Setting up Kubernetes Cluster¶

The new k8s-1node-cloud-init.sh file is now ready for setting up the Kubernetes cluster.

It can be run from a root shell of an existing Ubuntu 16.04 or 18.04 VM. Running this script will replace any existing installation of Docker host, Kubernetes, and Helm on the VM. The script will reboot the machine upon successful completion. Run the script like this:

% sudo -i

# ./k8s-1node-cloud-init.sh

This script can also be used as the user-data (a.k.a. cloud-init script) supplied to OpenStack when launching a new Ubuntu 16.04 or 18.04 VM.

After the script completes successfully and the machine reboots, running the kubectl command in a root shell should display information similar to the following:

# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5644d7b6d9-4gjp5 1/1 Running 0 103m

kube-system coredns-5644d7b6d9-pvsj8 1/1 Running 0 103m

kube-system etcd-ljitest 1/1 Running 0 102m

kube-system kube-apiserver-ljitest 1/1 Running 0 103m

kube-system kube-controller-manager-ljitest 1/1 Running 0 102m

kube-system kube-flannel-ds-amd64-nvjmq 1/1 Running 0 103m

kube-system kube-proxy-867v5 1/1 Running 0 103m

kube-system kube-scheduler-ljitest 1/1 Running 0 102m

kube-system tiller-deploy-68bf6dff8f-6pwvc 1/1 Running 0 102m

Installing Near Realtime RIC in RIC Cluster¶

After the Kubernetes cluster is installed, the next step is to install the (Near Realtime) RIC Platform.

Getting and Preparing Deployment Scripts¶

Clone the it/dep git repository that has deployment scripts and support files on the target VM. (You might have already done this in a previous step.)

% git clone https://gerrit.o-ran-sc.org/r/it/dep

Check out the branch of the repository that matches the release you want to deploy, then initialize the submodules. The example below stays on the default branch of a fresh clone:

git clone https://gerrit.o-ran-sc.org/r/it/dep

cd dep

git submodule update --init --recursive --remote

Modify the deployment recipe¶

Edit the recipe file ./RECIPE_EXAMPLE/PLATFORM/example_recipe.yaml.

- Specify the IP addresses used by the RIC and AUX cluster ingress controllers (e.g., the main interface IP) in the following section. If you do not plan to set up an AUX cluster, you can enter any private IPs (e.g., 10.0.2.1 and 10.0.2.2).

extsvcplt:
  ricip: ""
  auxip: ""

- To specify which version of the RIC platform components will be deployed, update the RIC platform component container tags in their corresponding section.

- You can specify which Docker registry is used for each component. If the Docker registry requires login credentials, add them in the following section. Note that the installation suite already includes credentials for the O-RAN Linux Foundation Docker registries; do not create duplicate entries.

docker-credential:
  enabled: true
  credential:
    SOME_KEY_NAME:
      registry: ""
      credential:
        user: ""
        password: ""
        email: ""

For more advanced recipe configuration options, please refer to the recipe configuration guideline.
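The empty `ricip` and `auxip` values in the `extsvcplt` section can be filled in by hand or with a small script. The sketch below assumes the two keys each appear on their own line as shown in the recipe excerpt; the helper `set_recipe_ips` is hypothetical, and the demonstration runs on a temporary file rather than on the real recipe.

```shell
#!/bin/sh
# set_recipe_ips FILE RICIP AUXIP: replace the values of the ricip
# and auxip keys in FILE. The result is written to a temporary file
# first, so FILE is only replaced when sed succeeds.
set_recipe_ips() {
    tmp="$1.tmp.$$"
    sed -e "s/^\([[:space:]]*ricip:\).*/\1 \"$2\"/" \
        -e "s/^\([[:space:]]*auxip:\).*/\1 \"$3\"/" \
        "$1" > "$tmp" && mv "$tmp" "$1"
}

# Demonstration on a throwaway file with the same layout as the
# extsvcplt section of the recipe.
demo=$(mktemp)
printf 'extsvcplt:\n  ricip: ""\n  auxip: ""\n' > "$demo"
set_recipe_ips "$demo" 10.0.2.100 10.0.2.101
cat "$demo"
rm -f "$demo"
```

When applying this to a real deployment, working on a copy of example_recipe.yaml keeps the original example intact.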

Deploying the Infrastructure and Platform Groups¶

After the recipes are edited, the Near Realtime RIC platform is ready to be deployed.

cd dep/bin

./deploy-ric-platform ../RECIPE_EXAMPLE/PLATFORM/example_recipe.yaml

Checking the Deployment Status¶

Now check the deployment status after a short wait. Results similar to the output shown below indicate a complete and successful deployment. Check the STATUS column from both kubectl outputs to ensure that all are either “Completed” or “Running”, and that none are “Error” or “ImagePullBackOff”.

# helm list

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

r3-a1mediator 1 Thu Jan 23 14:29:12 2020 DEPLOYED a1mediator-3.0.0 1.0 ricplt

r3-appmgr 1 Thu Jan 23 14:28:14 2020 DEPLOYED appmgr-3.0.0 1.0 ricplt

r3-dbaas1 1 Thu Jan 23 14:28:40 2020 DEPLOYED dbaas1-3.0.0 1.0 ricplt

r3-e2mgr 1 Thu Jan 23 14:28:52 2020 DEPLOYED e2mgr-3.0.0 1.0 ricplt

r3-e2term 1 Thu Jan 23 14:29:04 2020 DEPLOYED e2term-3.0.0 1.0 ricplt

r3-infrastructure 1 Thu Jan 23 14:28:02 2020 DEPLOYED infrastructure-3.0.0 1.0 ricplt

r3-jaegeradapter 1 Thu Jan 23 14:29:47 2020 DEPLOYED jaegeradapter-3.0.0 1.0 ricplt

r3-rsm 1 Thu Jan 23 14:29:39 2020 DEPLOYED rsm-3.0.0 1.0 ricplt

r3-rtmgr 1 Thu Jan 23 14:28:27 2020 DEPLOYED rtmgr-3.0.0 1.0 ricplt

r3-submgr 1 Thu Jan 23 14:29:23 2020 DEPLOYED submgr-3.0.0 1.0 ricplt

r3-vespamgr 1 Thu Jan 23 14:29:31 2020 DEPLOYED vespamgr-3.0.0 1.0 ricplt

# kubectl get pods -n ricplt

NAME READY STATUS RESTARTS AGE

deployment-ricplt-a1mediator-69f6d68fb4-7trcl 1/1 Running 0 159m

deployment-ricplt-appmgr-845d85c989-qxd98 2/2 Running 0 160m

deployment-ricplt-dbaas-7c44fb4697-flplq 1/1 Running 0 159m

deployment-ricplt-e2mgr-569fb7588b-wrxrd 1/1 Running 0 159m

deployment-ricplt-e2term-alpha-db949d978-rnd2r 1/1 Running 0 159m

deployment-ricplt-jaegeradapter-585b4f8d69-tmx7c 1/1 Running 0 158m

deployment-ricplt-rsm-755f7c5c85-j7fgf 1/1 Running 0 158m

deployment-ricplt-rtmgr-c7cdb5b58-2tk4z 1/1 Running 0 160m

deployment-ricplt-submgr-5b4864dcd7-zwknw 1/1 Running 0 159m

deployment-ricplt-vespamgr-864f95c9c9-5wth4 1/1 Running 0 158m

r3-infrastructure-kong-68f5fd46dd-lpwvd 2/2 Running 3 160m

# kubectl get pods -n ricinfra

NAME READY STATUS RESTARTS AGE

deployment-tiller-ricxapp-d4f98ff65-9q6nb 1/1 Running 0 163m

tiller-secret-generator-plpbf 0/1 Completed 0 163m
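The STATUS check described above can be automated by filtering the kubectl output for rows that are neither “Running” nor “Completed”. The following is a minimal sketch; the helper `bad_pods` is hypothetical, and the second pod row in the canned input is made up for illustration.

```shell
#!/bin/sh
# bad_pods COLUMN: read `kubectl get pods` output on stdin and print
# every row whose STATUS (field number COLUMN) is neither Running
# nor Completed. COLUMN is 3 for `-n <namespace>` output and 4 for
# `--all-namespaces` output. The header row is skipped.
bad_pods() {
    awk -v col="$1" 'NR > 1 && $col != "Running" && $col != "Completed"'
}

# Demonstration on canned input (the example-broken-pod row is
# invented to show a failing case).
bad_pods 3 <<'EOF'
NAME READY STATUS RESTARTS AGE
tiller-secret-generator-plpbf 0/1 Completed 0 163m
example-broken-pod 0/1 ImagePullBackOff 0 5m
EOF

# On a live cluster:
# kubectl get pods -n ricplt | bad_pods 3
# kubectl get pods -n ricinfra | bad_pods 3
```

An empty result means that every pod in the listing is in one of the two expected states.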

Checking Container Health¶

Check the health of the application manager platform component by querying it via the ingress controller using the following command.

% curl -v http://localhost:32080/appmgr/ric/v1/health/ready

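The output should look similar to the following. (This sample was captured with the node IP 10.0.2.100 as the target host rather than localhost.)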

* Trying 10.0.2.100...

* TCP_NODELAY set

* Connected to 10.0.2.100 (10.0.2.100) port 32080 (#0)

> GET /appmgr/ric/v1/health/ready HTTP/1.1

> Host: 10.0.2.100:32080

> User-Agent: curl/7.58.0

> Accept: */*

>

< HTTP/1.1 200 OK

< Content-Type: application/json

< Content-Length: 0

< Connection: keep-alive

< Date: Wed, 22 Jan 2020 20:55:39 GMT

< X-Kong-Upstream-Latency: 0

< X-Kong-Proxy-Latency: 2

< Via: kong/1.3.1

<

* Connection #0 to host 10.0.2.100 left intact
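Because the platform may need some time after deployment before the health endpoint answers, the check can be wrapped in a retry loop. The sketch below is one way to do this and not part of the it/dep scripts; the helpers `http_status` and `wait_for_ready` and the retry values in the usage comment are hypothetical.

```shell
#!/bin/sh
# http_status URL: print the HTTP status code returned for URL
# (curl reports 000 when no response was received).
http_status() {
    curl -s -o /dev/null -w '%{http_code}' "$1"
}

# wait_for_ready URL TRIES DELAY: poll URL until it answers 200,
# at most TRIES times, sleeping DELAY seconds after each failure.
wait_for_ready() {
    i=0
    while [ "$i" -lt "$2" ]; do
        if [ "$(http_status "$1")" = "200" ]; then
            echo "ready: $1"
            return 0
        fi
        i=$((i + 1))
        sleep "$3"
    done
    echo "not ready after $2 attempts: $1" >&2
    return 1
}

# Example usage (30 attempts, 10 seconds apart):
# wait_for_ready http://localhost:32080/appmgr/ric/v1/health/ready 30 10
```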

Undeploying the Infrastructure and Platform Groups¶

To undeploy all the containers, perform the following steps in a root shell within the it/dep repository.

# cd bin

# ./undeploy-ric-platform

Results similar to below indicate a complete and successful cleanup.

# ./undeploy-ric-platform

Undeploying RIC platform components [appmgr rtmgr dbaas1 e2mgr e2term a1mediator submgr vespamgr rsm jaegeradapter infrastructure]

release "r3-appmgr" deleted

release "r3-rtmgr" deleted

release "r3-dbaas1" deleted

release "r3-e2mgr" deleted

release "r3-e2term" deleted

release "r3-a1mediator" deleted

release "r3-submgr" deleted

release "r3-vespamgr" deleted

release "r3-rsm" deleted

release "r3-jaegeradapter" deleted

release "r3-infrastructure" deleted

configmap "ricplt-recipe" deleted

namespace "ricxapp" deleted

namespace "ricinfra" deleted

namespace "ricplt" deleted

Restarting the VM¶

After a reboot of the VM, and a suitable delay for initialization, all the containers should be running again as shown above.

Installing Auxiliary Functions in AUX Cluster¶

Resource Requirements¶

To run the RIC-AUX cluster in a development and testing setting, the minimum resource requirement is a VM with 4 vCPUs, 16 GB of RAM, and at least 40 GB of disk space.

Getting and Preparing Deployment Scripts¶

Run the following commands in a root shell:

git clone https://gerrit.o-ran-sc.org/r/it/dep

cd dep

git submodule update --init --recursive --remote

Modify the deployment recipe¶

Edit the recipe file ./RECIPE_EXAMPLE/AUX/example_recipe.yaml.

- Specify the IP addresses used by the RIC and AUX cluster ingress controllers (e.g., the main interface IP) in the following section. If you are only testing the AUX cluster, you can enter any private IPs (e.g., 10.0.2.1 and 10.0.2.2).

extsvcplt:
  ricip: ""
  auxip: ""

- To specify which version of the RIC platform components will be deployed, update the RIC platform component container tags in their corresponding section.

- You can specify which Docker registry is used for each component. If the Docker registry requires login credentials, add them in the following section. Note that the installation script already includes credentials for the O-RAN Linux Foundation Docker registries; do not create duplicate entries.

docker-credential:
  enabled: true
  credential:
    SOME_KEY_NAME:
      registry: ""
      credential:
        user: ""
        password: ""
        email: ""

For more advanced recipe configuration options, refer to the recipe configuration guideline.

Deploying the Aux Group¶

After the recipes are edited, the AUX group is ready to be deployed.

cd dep/bin

./deploy-ric-aux ../RECIPE_EXAMPLE/AUX/example_recipe.yaml

Checking the Deployment Status¶

Now check the deployment status. Results similar to the output shown below indicate a complete and successful deployment.

# helm list

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

r3-aaf 1 Mon Jan 27 13:24:59 2020 DEPLOYED aaf-5.0.0 onap

r3-dashboard 1 Mon Jan 27 13:22:52 2020 DEPLOYED dashboard-1.2.2 1.0 ricaux

r3-infrastructure 1 Mon Jan 27 13:22:44 2020 DEPLOYED infrastructure-3.0.0 1.0 ricaux

r3-mc-stack 1 Mon Jan 27 13:23:37 2020 DEPLOYED mc-stack-0.0.1 1 ricaux

r3-message-router 1 Mon Jan 27 13:23:09 2020 DEPLOYED message-router-1.1.0 ricaux

r3-mrsub 1 Mon Jan 27 13:23:24 2020 DEPLOYED mrsub-0.1.0 1.0 ricaux

r3-portal 1 Mon Jan 27 13:24:12 2020 DEPLOYED portal-5.0.0 ricaux

r3-ves 1 Mon Jan 27 13:23:01 2020 DEPLOYED ves-1.1.1 1.0 ricaux

# kubectl get pods -n ricaux

NAME READY STATUS RESTARTS AGE

deployment-ricaux-dashboard-f78d7b556-m5nbw 1/1 Running 0 6m30s

deployment-ricaux-ves-69db8c797-v9457 1/1 Running 0 6m24s

elasticsearch-master-0 1/1 Running 0 5m36s

r3-infrastructure-kong-7697bccc78-nsln7 2/2 Running 3 6m40s

r3-mc-stack-kibana-78f648bdc8-nfw48 1/1 Running 0 5m37s

r3-mc-stack-logstash-0 1/1 Running 0 5m36s

r3-message-router-message-router-0 1/1 Running 3 6m11s

r3-message-router-message-router-kafka-0 1/1 Running 1 6m11s

r3-message-router-message-router-kafka-1 1/1 Running 2 6m11s

r3-message-router-message-router-kafka-2 1/1 Running 1 6m11s

r3-message-router-message-router-zookeeper-0 1/1 Running 0 6m11s

r3-message-router-message-router-zookeeper-1 1/1 Running 0 6m11s

r3-message-router-message-router-zookeeper-2 1/1 Running 0 6m11s

r3-mrsub-5c94f5b8dd-wxcw5 1/1 Running 0 5m58s

r3-portal-portal-app-8445f7f457-dj4z8 2/2 Running 0 4m53s

r3-portal-portal-cassandra-79cf998f69-xhpqg 1/1 Running 0 4m53s

r3-portal-portal-db-755b7dc667-kjg5p 1/1 Running 0 4m53s

r3-portal-portal-db-config-bfjnc 2/2 Running 0 4m53s

r3-portal-portal-zookeeper-5f8f77cfcc-t6z7w 1/1 Running 0 4m53s

Installing RIC Applications¶

Loading xApp Helm Charts¶

The RIC Platform App Manager deploys RIC applications (a.k.a. xApps) using Helm charts stored in a private local Helm repo. This local Helm repo is deployed as a sidecar of the App Manager pod, and its APIs are exposed through an ingress controller with TLS enabled. You can use either HTTP or HTTPS to access it.

Before any xApp can be deployed, its Helm chart must be loaded into this private Helm repo. The example below shows the command sequences that upload and delete the xApp Helm charts:

- Load the xApp Helm charts into the Helm repo;

- Verify the xApp Helm charts;

- Call App Manager to deploy the xApp;

- Call App Manager to delete the xApp;

- Delete the xApp helm chart from the private Helm repo.

In the following example, <INGRESS_CONTROLLER_IP> is the IP address that the RIC cluster ingress controller is listening on. If you are using a VM, it is the IP address of the main interface. If you are using REC clusters, it is the DANM network IP address you assigned in the recipe. If the commands are executed on the host machine itself, you can use “localhost” as the <INGRESS_CONTROLLER_IP>.

# load admin-xapp Helm chart to the Helm repo

curl -L --data-binary "@admin-xapp-1.0.7.tgz" http://<INGRESS_CONTROLLER_IP>:32080/helmrepo

# verify the xApp Helm charts

curl -L http://<INGRESS_CONTROLLER_IP>:32080/helmrepo/index.yaml

# test App Manager health check API

curl -v http://<INGRESS_CONTROLLER_IP>:32080/appmgr/ric/v1/health/ready

# expecting a 200 response

# list deployed xApps

curl http://<INGRESS_CONTROLLER_IP>:32080/appmgr/ric/v1/xapps

# expecting a []

# deploy xApp, the xApp name has to be the same as the xApp Helm chart name

curl -X POST http://<INGRESS_CONTROLLER_IP>:32080/appmgr/ric/v1/xapps -d '{"name": "admin-xapp"}'

# expecting: {"name":"admin-xapp","status":"deployed","version":"1.0","instances":null}

# check again deployed xApp

curl http://<INGRESS_CONTROLLER_IP>:32080/appmgr/ric/v1/xapps

# expecting a JSON array with an entry for admin-xapp

# check pods using kubectl

kubectl get pods --all-namespaces

# expecting the admin-xapp pod showing up

# undeploy the xApp

curl -X DELETE http://<INGRESS_CONTROLLER_IP>:32080/appmgr/ric/v1/xapps/admin-xapp

# check pods using kubectl

kubectl get pods --all-namespaces

# expecting the admin-xapp pod gone or shown as terminating

# to delete a chart

curl -L -X DELETE -u helm:helm http://<INGRESS_CONTROLLER_IP>:32080/api/charts/admin-xapp/1.0.7

For more xApp deployment and usage examples, please see the documentation for the it/test repository.
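The chart name and version used in the deletion URL can be derived from the packaged chart file name, which helps keep the upload and delete steps consistent. The sketch below assumes the file follows the `<name>-<version>.tgz` pattern with no hyphen inside the version, and that the deletion URL should name the same version that was uploaded; the helper names are hypothetical.

```shell
#!/bin/sh
# chart_name FILE / chart_version FILE: split a packaged chart file
# name of the form <name>-<version>.tgz at its last hyphen. This
# assumes the version itself contains no hyphen (e.g. no "-rc1").
chart_name() {
    base=$(basename "$1" .tgz)
    echo "${base%-*}"
}

chart_version() {
    base=$(basename "$1" .tgz)
    echo "${base##*-}"
}

# chart_delete_url IP FILE: print a chart deletion URL, in the same
# form as the example above, for the chart packaged in FILE.
chart_delete_url() {
    echo "http://$1:32080/api/charts/$(chart_name "$2")/$(chart_version "$2")"
}

chart_delete_url localhost admin-xapp-1.0.7.tgz
```

The printed URL could then be passed to the `curl -L -X DELETE -u helm:helm` command shown earlier.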